OpenAI Introduces Gemini Live-like Advanced Voice Mode for ChatGPT

In a move that could reshape how users interact with AI, OpenAI has begun rolling out Advanced Voice Mode for ChatGPT. The feature, reminiscent of Google’s Gemini Live, brings natural, real-time voice conversations to the forefront and opens the door to a broader range of applications. The rollout, initiated in late September 2024, is being conducted in phases, gradually granting access to a wider user base.

This development marks an important step in the evolution of conversational AI because it addresses a key limitation of text-based interaction: typed communication lacks the naturalness and nuance of speech. With Advanced Voice Mode, users can hold dynamic, lifelike dialogues with ChatGPT, using their voice to express intent, emotion, and context. The implications are far-reaching, from improving accessibility for people with limited mobility to changing how we interact with AI-powered assistants in daily life.

Key Features and Benefits of Advanced Voice Mode:

Natural, Intuitive Conversations: Advanced Voice Mode uses speech recognition and natural language processing to enable fluid, human-like interactions. Users can converse with ChatGPT as they would with a friend, making the experience more engaging and accessible.

Enhanced Accessibility: This feature is a boon for users with visual impairments or limited dexterity, empowering them to interact with ChatGPT effortlessly through voice commands.

Multi-Tasking Made Easy: By enabling voice control, Advanced Voice Mode frees users from the constraints of keyboard and mouse, allowing them to multi-task while interacting with ChatGPT. This is particularly useful for scenarios where hands-free operation is essential, such as driving or cooking.

Personalized Experience: The system is designed to adapt to individual users’ voices and communication styles over time, creating a more tailored experience.

Broader Applications: The introduction of voice capabilities opens up a world of possibilities for integrating ChatGPT into various domains, from customer service and education to healthcare and entertainment.

The Technology Behind Advanced Voice Mode:

OpenAI’s Advanced Voice Mode is powered by a sophisticated blend of technologies, including:

State-of-the-art Speech Recognition: This technology accurately transcribes spoken language into text, enabling ChatGPT to understand user queries and commands.

Natural Language Understanding (NLU): NLU enables ChatGPT to interpret the meaning and intent behind user input, even in the context of complex or nuanced conversations.

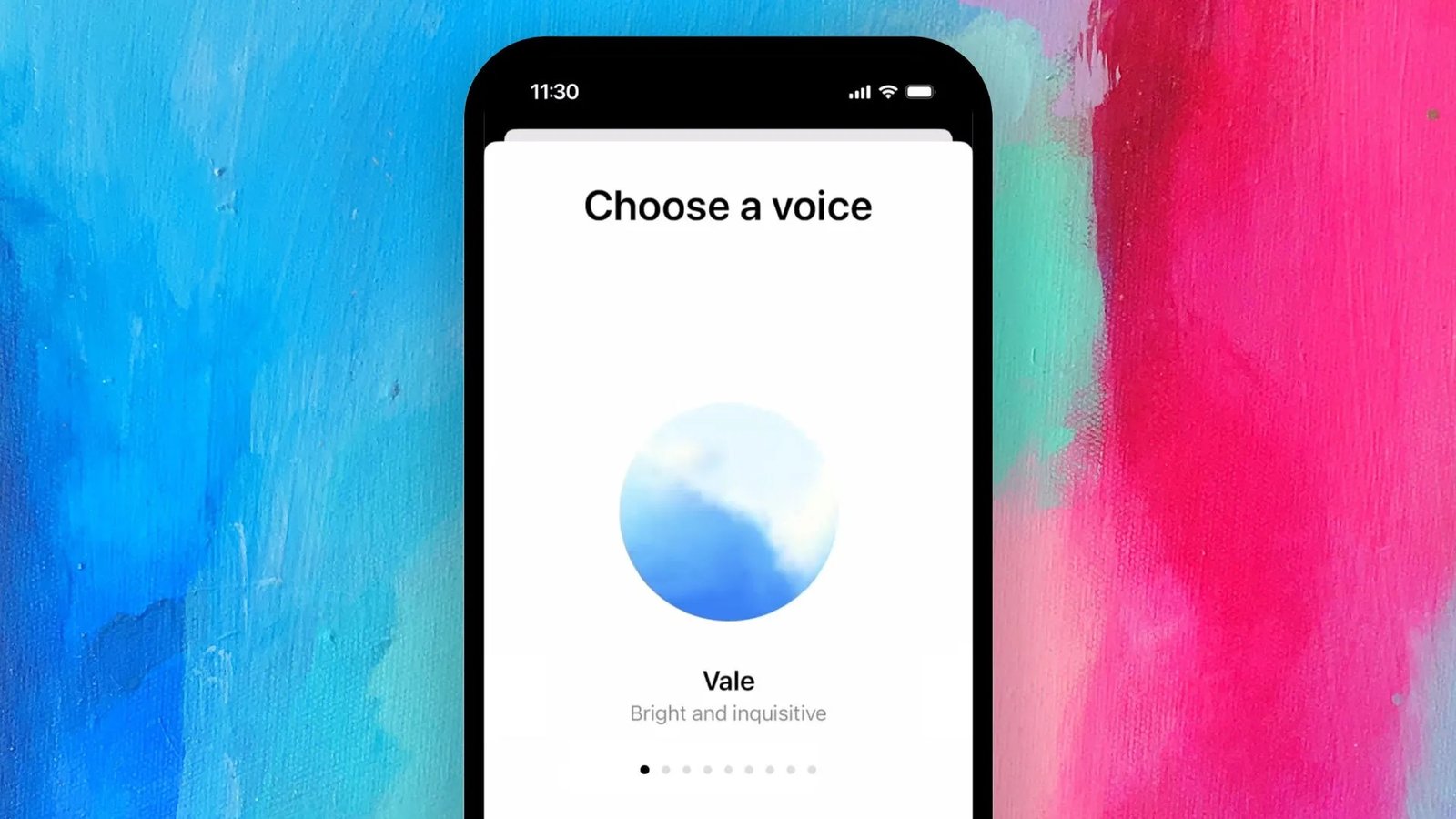

Text-to-Speech (TTS): TTS generates natural-sounding responses in a variety of voices and languages, allowing users to engage in seamless voice dialogues.

Machine Learning: Continuous learning and adaptation algorithms ensure that the system improves over time, delivering increasingly accurate and personalized interactions.
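The way the components listed above fit together can be illustrated with a small conceptual sketch. It is not OpenAI’s implementation, and no real speech model or API is involved: every function name here (`recognize_speech`, `understand`, `respond`, `synthesize`, `voice_turn`) is hypothetical, and "audio" is simulated as UTF-8 bytes so the example runs without any external dependencies. It only shows the order of the stages in a single voice turn.

```python
from dataclasses import dataclass


@dataclass
class Intent:
    """A very coarse interpretation of what the user said."""
    name: str   # "question", "command", or "chat"
    text: str   # the transcript the intent was derived from


def recognize_speech(audio: bytes) -> str:
    # Stand-in for speech recognition: the simulated audio is just UTF-8 text.
    return audio.decode("utf-8").strip()


def understand(text: str) -> Intent:
    # Stand-in for natural language understanding: simple keyword rules.
    lowered = text.lower()
    if lowered.endswith("?"):
        return Intent("question", text)
    if lowered.startswith(("set ", "remind ")):
        return Intent("command", text)
    return Intent("chat", text)


def respond(intent: Intent) -> str:
    # Stand-in for the language model that drafts a reply.
    if intent.name == "question":
        return f"Let me look that up: {intent.text}"
    if intent.name == "command":
        return f"Okay, noted: {intent.text}"
    return "Tell me more."


def synthesize(text: str) -> bytes:
    # Stand-in for text-to-speech: encode the reply back into simulated audio.
    return text.encode("utf-8")


def voice_turn(audio: bytes) -> bytes:
    # One full turn: speech recognition -> understanding -> reply -> speech.
    transcript = recognize_speech(audio)
    intent = understand(transcript)
    reply = respond(intent)
    return synthesize(reply)


if __name__ == "__main__":
    print(voice_turn(b"What time is it?").decode("utf-8"))
```

Keeping each stage behind its own function means any one of them could be replaced, for example with a real recognizer or synthesizer, without changing the others.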

The Rollout and User Experience:

OpenAI is adopting a phased approach to the rollout of Advanced Voice Mode, starting with a select group of users and gradually expanding access. Initial feedback has been overwhelmingly positive, with users praising the naturalness of the interactions and the enhanced accessibility the feature provides.

“It’s like talking to a real person,” remarked one user, while another commented on how the voice mode made interacting with ChatGPT feel “more intuitive and engaging.” Users have also highlighted the potential of this feature to transform how they interact with AI in their daily lives, from managing tasks and accessing information to simply having a conversation.

My Personal Experience:

As an early adopter of Advanced Voice Mode, I was immediately struck by its seamless integration and natural feel. It transformed my interaction with ChatGPT from a text-based exchange to a dynamic conversation, making it feel more like engaging with a human assistant. I found myself using it for a wider range of tasks, from brainstorming ideas to setting reminders, as the voice control offered a convenient, hands-free experience. I’m excited to see how this technology evolves and the new possibilities it unlocks for AI-powered interactions.

Implications and Future Outlook:

The introduction of Advanced Voice Mode marks a significant leap forward in conversational AI, with far-reaching implications for various sectors. It has the potential to:

Transform customer service: Enabling natural voice interactions can enhance customer satisfaction and streamline support processes.

Revolutionize education: It can provide personalized learning experiences and make educational content more accessible.

Empower individuals with disabilities: Voice control can break down barriers and provide greater independence.

Reimagine entertainment: It can create more immersive and interactive experiences across various platforms.

As OpenAI continues to refine and expand this feature, we can expect more applications and use cases to emerge. The future of conversational AI looks increasingly voice-enabled, and Advanced Voice Mode reflects OpenAI’s commitment to pushing the boundaries of what’s possible.